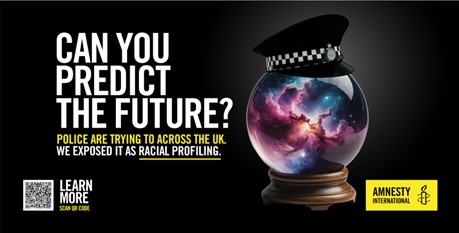

Transport for London (TfL) has rejected an Amnesty International advert about predictive policing that was intended for Elephant and Castle tube station.

TfL is understood to have rejected the advert on the grounds that it may bring other members of the Greater London Authority Group into disrepute.

The advert prompts commuters to read Amnesty’s report, Automated Racism: How Police Data and Algorithms Code Discrimination into Policing.

Amnesty International UK’s racial justice policy and research director lead Ilyas Nagde said: “All this is the compounding of discrimination.”

“[It’s] giving people access to information about the way in which a system is operating in their communities…that’s not necessarily seeking to bring anyone into disrepute.

“It’s about giving people information.”

Predictive policing is the use of automated technology to forecast where and when a crime may occur and who may be involved in it.

Amnesty called on Mayor of London Sadiq Khan to reverse the rejection of the advert to ensure predictive policing does not have an effect on campaign organisations or residents of south London.

Nagde explained how predictive policing can lead to someone modifying their behaviour, such as avoiding an area where it is in operation, for fear of a negative response.

Nagde said: “We are calling on the chair of TfL, Mayor Sadiq Khan, to not be complicit in this cover-up by preventing police transparency.”

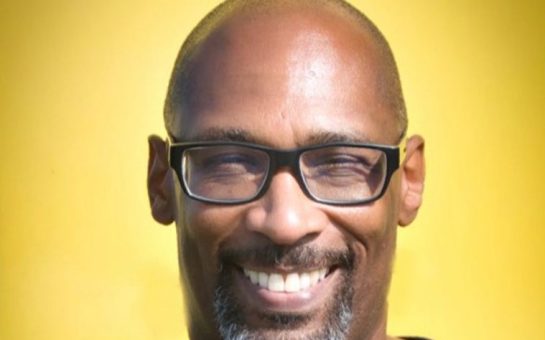

John Pegram discovered he was risk-scored using predictive policing technology in 2024.

Pegram said he felt like he had gone back in time to the 1990s, when he claims he was being regularly stopped and searched by police.

He said: “It’s impacted my life and business.

“I have PTSD because of what’s happened to me.

“I think the attention I’ve had off the police, and there is a clear point of attention, could easily be attributed to the offender management app and the risk score I have been given, which is a standard risk rating.

“It deepens wounds, and it makes you feel like you’re being criminalised.”

StopWatch, a charity addressing the use of stop and search powers, are concerned that predictive policing risks infringing on the right to the presumption of innocence.

StopWatch policy advocacy lead Jodie Bradshaw said: “Predictive policing is a subjective projection of a crime which you may or may not commit, there is no presumption of innocence.”

In 2023, the Metropolitan Police’s rate of stop and search encounters per 1,000 population was higher for people of black ethnic appearance than for any other ethnic group.

Bradshaw said: “If you’re black, you’re more likely to end up on the police national database even if you’re innocent, and then you’re more likely to be flagged by predictive policing technologies for a crime that you haven’t committed.

“But they reckon maybe there’s a chance that you could commit a crime in the future.”

Amnesty’s report made three recommendations, including a prohibition on predictive policing systems.

They called for transparency obligations on data-based and data-driven systems used by authorities, including a publicly accessible register with details of the systems in use.

Amnesty also want accountability obligations, including a right and a clear forum to challenge a predictive, profiling or similar decision, or the consequences arising from such a decision.

TfL, the office for the Mayor of London, and the Metropolitan Police were all contacted for comment.

Feature image: Amnesty International UK.
